Integration tests are slow and difficult to maintain: they touch substantially more of the system than unit tests do, so they need to change more often. Still, they fill a role that unit tests cannot, so focusing solely on unit tests is not an option. Because complex tests are painful when they fail, we looked at the most common mistakes (as identified by the community) that set you up for failure, and at how to avoid them.

Through all the smoke, you can't see the fire.

What exactly is smoke testing, and how will it benefit me?

Smoke testing is exactly what it sounds like: switch it on and see if it produces any smoke. If there is smoke, there is no need to continue testing. This is the most fundamental quality gate, ensuring that your application's core functionality works. The outcome of a failed smoke test should always be the same: the build is dead on delivery. That is also the easiest way to decide whether a test belongs in the smoke suite or is really a performance, regression, or other type of test.

So, why is it critical to classify a test accurately as a smoke test? Because smoke tests are the most fundamental quality gate, they run on every build, so every second they take is added to every build and delays all higher-level testing. When it comes to smoke testing, less is more. If you do not limit your smoke tests to primary-path functionality, you will fail in two ways:

- Your smoke tests will take too long to run, preventing you from failing quickly.

- There will be so much smoke that you will be unable to see the fire.

"Check if a user can sign up" is a nice example of a smoke test. Despite the statement's simplicity, the test may involve several layers of your application: Is the load balancer functioning properly? Is the page rendered? Is it possible to communicate with the database? Can we locate any pertinent records?

Smoke tests aren't about finding and resolving every issue; they're about catching show-stoppers that should ideally be kept out of production or fixed right away. Performance and regression testing each deserve their own place in your test suite, but they should not be confused with smoke testing. KISS (keep it simple, stupid) is the best rule of thumb here.
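The fail-fast idea behind smoke testing can be sketched in Python as a small runner that executes layered checks, such as those in the sign-up example above, in order and stops at the first one that produces smoke. This is a minimal sketch under stated assumptions, not a TestQuality feature or any framework's API: `run_smoke_suite`, the `budget_s` time budget, and the check names are hypothetical, and the lambdas stand in for real probes of a load balancer, page, or database.

```python
import time
from typing import Callable, List, Optional, Tuple

# A smoke check is a name plus a zero-argument callable that returns True
# when that layer is healthy. All checks used below are hypothetical stand-ins.
SmokeCheck = Tuple[str, Callable[[], bool]]


def run_smoke_suite(checks: List[SmokeCheck], budget_s: float = 60.0) -> Optional[str]:
    """Run checks in order and stop at the first sign of smoke.

    Returns None when every check passes, otherwise a short reason.
    """
    start = time.monotonic()
    for name, check in checks:
        try:
            healthy = check()
        except Exception as exc:  # a crash counts as smoke too
            return f"{name}: raised {exc!r}"
        if not healthy:
            # No point testing the upper layers once a lower one is down.
            return f"{name}: failed"
        if time.monotonic() - start > budget_s:
            # A slow smoke suite defeats the purpose of failing fast.
            return f"smoke suite exceeded its {budget_s}s budget after {name!r}"
    return None


if __name__ == "__main__":
    # Layers from the "can a user sign up?" example, with the database down.
    sign_up_checks: List[SmokeCheck] = [
        ("load balancer responds", lambda: True),
        ("sign-up page renders", lambda: True),
        ("database reachable", lambda: False),
        ("user record found", lambda: True),  # never reached
    ]
    print(run_smoke_suite(sign_up_checks))  # prints "database reachable: failed"
```

Keeping the runner this small is deliberate: a check that needs a long time or exercises non-critical features probably belongs in a regression or performance suite instead.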

Your test suite design is awful

If you find yourself wondering whether some tests could replace one another, your test suite architecture may be flawed. Meanwhile, automated testing has become faster and more efficient as automation tools such as Selenium have grown in popularity.

Unit tests are the most cost-effective in terms of development time, execution time, and maintenance. The higher in the stack a test runs, the higher its maintenance and execution costs. End-to-end tests are more expensive because they need the whole stack to run, and their setup can take several minutes. Unit tests are also much easier to debug than end-to-end tests, because a failure points to a single unit. All levels of testing provide value, but if the same condition can be asserted at two different levels, choose the lower, less expensive one.
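For instance, suppose a sign-up form must reject malformed e-mail addresses. An end-to-end test would drive a browser through the whole stack just to check the error message; if the rule lives in a plain function, the same condition can be asserted at the unit level in milliseconds. The sketch below assumes such a function exists: `is_valid_email` and its deliberately simple regular expression are hypothetical, not a complete e-mail validator.

```python
import re

# Hypothetical rule: something@domain.tld, with no spaces and a single "@".
# Deliberately simplified; real address validation is far more involved.
_EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def is_valid_email(address: str) -> bool:
    """Return True if the whole address matches the simplified pattern."""
    return _EMAIL_PATTERN.fullmatch(address) is not None


# Unit-level tests (pytest style): no browser, server, or database needed.
def test_accepts_plain_address() -> None:
    assert is_valid_email("user@example.com")


def test_rejects_missing_domain() -> None:
    assert not is_valid_email("user@")


def test_rejects_embedded_space() -> None:
    assert not is_valid_email("us er@example.com")
```

The end-to-end suite then only needs to confirm that the form is wired to the validator, rather than re-checking every malformed input through the browser.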

That said, while researching this topic we found something unexpected in our own test results. For context: we practice TDD, so we always write unit tests and follow unit-testing best practices. We also look beyond unit-test coverage, examining coverage at every test level as well as aggregate coverage. For this we use TestQuality's Analyze tab, and in particular its subtab that measures test quality: based on your test executions, it shows which tests were useful and which were less so, which tests are flagged for quality reasons, which have not been run yet, and so on. In other words, we can observe the real consequences of the principles described above.

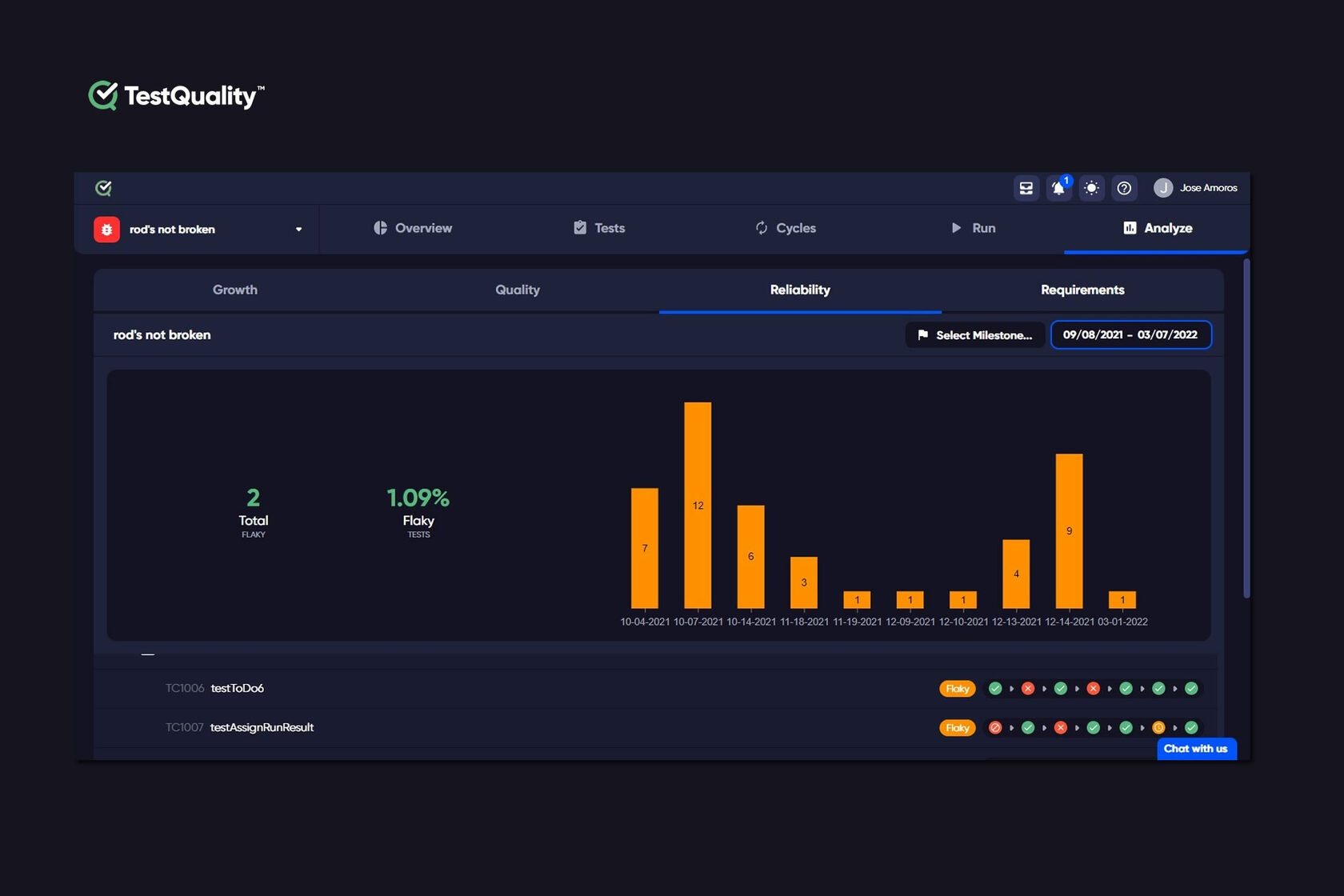

TestQuality's Analyze tab offers several test measurement options, one of which focuses specifically on how flaky your tests are: the Test Reliability tab, which helps you identify flaky tests. In its graph, each test's flakiness is displayed as an icon.

TestQuality's Test Reliability analysis to detect test flakiness

We were shocked to see that, although we work in TDD and deliberately try to avoid test overlaps (in some circumstances, such as negative tests, overlaps are beneficial), our unit and integration tests asserted the same conditions. While this wasn't causing our integration tests to fail, our test suite was neither effective nor efficient. Even TDD with a "proper" test suite design does not guarantee that you are getting the most out of your tests. As a result, we now use our coverage numbers to plan test optimization and development.

Do you know what your tests don't cover?

Poor Implementation, Good Methodologies

- Automate Everything: The advantages of automated testing for an effective product release are widely recognized. However, if you’re not writing tests first, it is very easy to over-engineer your automation. Unit tests in particular require careful code design (or even redesign). Some components are less testable than others, but smaller units should be easier to test if they can be isolated.

- Manual Execution of Complicated Tests: What is the point of developing sophisticated, time-consuming, costly, and repetitive tests if they must be executed manually? Manual execution is error-prone. Automated tests not only save time but also free you to focus on problems, analyze test results, and debug: you spend your time responding to test failures rather than remembering to run every relevant test, verify its output, and compare it to the previous run.

- Diving Deep with Databases: With databases, and with most other external resources, keep testing dependencies to a minimum. If your application can run on SQLite during testing, stick with it. Setting up and connecting to a real database or service takes effort and may provide little benefit. That is not to say integration with the external resource should be ignored; it should be tested, but separately from the integration of your own components.

- Reliability (aka flakiness): If tests fail often, it is only a matter of time until people stop running them. It may be impossible to keep every test passing 100% of the time, but not running tests at all is not the solution; repairing them is. If fixing a test is expensive, it may be better to disable it than to let it generate noise. With TestQuality you can measure how flaky your tests are: whether they repeatedly fail and then pass, whether they are useful to you, or whether your team is likely to ignore them. The Test Reliability view in TestQuality Analyze helps you identify the flaky ones.
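The database advice can be sketched with Python's built-in `sqlite3` module: if a data-access component only depends on a connection object, each test can hand it a fresh in-memory database. `UserRepository` and `make_test_repo` are hypothetical names used for illustration. SQLite's SQL dialect and behavior differ from production engines such as PostgreSQL or MySQL, which is exactly why integration with the real database still deserves its own, separate tests.

```python
import sqlite3


class UserRepository:
    """Hypothetical data-access component that depends only on a connection."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn

    def create_schema(self) -> None:
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS users ("
            "id INTEGER PRIMARY KEY, email TEXT UNIQUE NOT NULL)"
        )

    def add(self, email: str) -> int:
        # The connection context manager commits on success and rolls back
        # if the INSERT raises (for example, on a duplicate e-mail).
        with self.conn:
            cur = self.conn.execute("INSERT INTO users (email) VALUES (?)", (email,))
        return cur.lastrowid

    def count(self) -> int:
        return self.conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]


def make_test_repo() -> UserRepository:
    # ":memory:" gives each test its own throwaway database:
    # no server to start, no credentials, nothing to clean up afterwards.
    repo = UserRepository(sqlite3.connect(":memory:"))
    repo.create_schema()
    return repo


def test_added_user_is_persisted() -> None:
    repo = make_test_repo()
    repo.add("a@example.com")
    assert repo.count() == 1


def test_duplicate_email_is_rejected() -> None:
    repo = make_test_repo()
    repo.add("a@example.com")
    try:
        repo.add("a@example.com")
    except sqlite3.IntegrityError:
        pass
    else:
        raise AssertionError("duplicate e-mail was accepted")
    assert repo.count() == 1
```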
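For the Reliability point, one common heuristic treats a test as flaky when it both passes and fails on the same code revision: the code did not change, so the differing outcomes point to the test or its environment rather than to a regression. The sketch below applies that heuristic to a list of run records; the `(test, commit, passed)` record shape and `find_flaky_tests` are assumptions for illustration, not how TestQuality's Test Reliability view computes its results.

```python
from collections import defaultdict
from typing import DefaultDict, Iterable, Set, Tuple

# One record per test execution: (test name, commit SHA, passed?).
# This record shape is an assumption; adapt it to your CI's result format.
Run = Tuple[str, str, bool]


def find_flaky_tests(runs: Iterable[Run]) -> Set[str]:
    """Return tests that both passed and failed on at least one commit."""
    outcomes: DefaultDict[Tuple[str, str], Set[bool]] = defaultdict(set)
    for test, commit, passed in runs:
        outcomes[(test, commit)].add(passed)
    # Seeing both True and False for one (test, commit) pair means the same
    # code produced different results.
    return {test for (test, _commit), seen in outcomes.items() if len(seen) == 2}


if __name__ == "__main__":
    history = [
        ("test_login", "a1b2c3", True),
        ("test_login", "a1b2c3", False),    # same commit, different result
        ("test_checkout", "a1b2c3", False),
        ("test_checkout", "d4e5f6", True),  # fixed by a new commit: not flaky
    ]
    print(find_flaky_tests(history))  # prints {'test_login'}
```

A flagged test can then be repaired or, if repair is too expensive, disabled so that it stops generating noise.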

Finally, don't go overboard. Every test should serve a business objective; testing for the sake of testing is ineffective. Code coverage is a helpful indicator, but verifying that “return 4 returns 4” is not a wise investment of anyone’s time.

Unfriendly Infrastructure

Your tests may be well designed and still run poorly on your testing infrastructure, or you may not have considered the nuances of an automated environment. Either way, the infrastructure your tests run on is just as critical as the tests themselves. Communication across departments must be very clear to avoid wasting time on duplicated work. And while machine time is cheaper than human labor, that is no excuse for writing inefficient code.

Invest in your infrastructure the way you would invest in hiring new employees: make sure it meets the requirements, in this case your testing requirements. Running e2e tests may require a significant amount of memory and processing power, and testing on virtual machines can be harder, especially when a graphical user interface is involved. Don't let your infrastructure become a bottleneck in the release process: when tests are stuck in an execution queue or take too long to finish, add more machines. Spinning up additional AWS instances, for example, doesn't take long, and engineers' time is more valuable.

TestQuality can also help your QA specialists and software testers increase test efficiency. Teams add new tests every week, and roughly twice a year we find that the overall test duration has grown too long; many teams respond by adding hardware for automated testing or people for manual and free-play (exploratory) testing.

TestQuality simplifies test case creation and organization. It is competitively priced, and free when used with free GitHub repositories. Its rich, flexible reporting helps you visualize and understand where you and your development or QA team stand in your project's quality lifecycle. Beyond reporting, look for analytics that help you gauge the quality and effectiveness of your test cases and testing efforts, so you can be sure you are building and executing the most effective tests.